Jon Smirl

After quitting work on Xgl I received a lot of email and read a lot of posts. My conclusion is that most people don’t really know what is going on with graphics in Linux. It’s understandable that people don’t see the whole picture. Graphics is a large and complex area with many software components and competing developer groups. I’ve written this as an attempt to explain how all of the parts fit together.

1. History.

This year is the X server’s twenty-first birthday. Started in 1984 out of Project Athena, it has served the Unix community well over the years. X is in widespread use and powers most Linux desktops today. The Wikipedia article provides much more detail, but two major X innovations are that it is open source, which enabled cross-platform support, and network transparency.

However, X was designed twenty years ago, and video hardware has changed since then. If you look at the floor plan of a modern video chip, you will notice a small section labeled 2D. That’s because 90% of the chip is dedicated to the 3D pipeline. You just paid for that 3D hardware, so wouldn’t it be nice if the desktop used it? A lot of people don’t realize that 3D hardware can draw 2D desktops. Look at your screen: it’s a flat 2D surface, right? Any picture generated by the 3D hardware ends up on a flat 2D screen. This should lead you to the conclusion that, with the appropriate programming, 3D hardware can draw 2D desktops.
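A minimal sketch of the coordinate math involved, written in Python for readability: to draw a 2D rectangle with the 3D pipeline, you map its pixel coordinates into the normalized device coordinates that OpenGL-style hardware expects (an orthographic projection) and submit it as two triangles. The function names are illustrative, and the sketch assumes a top-left pixel origin with y increasing downward, as in X.

```python
def pixel_to_ndc(x, y, width, height):
    """Map a pixel coordinate (origin top-left, y down) to OpenGL-style
    normalized device coordinates (origin at center, y up, range -1..1)."""
    ndc_x = 2.0 * x / width - 1.0
    ndc_y = 1.0 - 2.0 * y / height
    return (ndc_x, ndc_y)


def rect_to_triangles(x, y, w, h, width, height):
    """Split a 2D window rectangle into the two triangles a 3D pipeline draws."""
    top_left = pixel_to_ndc(x, y, width, height)
    top_right = pixel_to_ndc(x + w, y, width, height)
    bottom_left = pixel_to_ndc(x, y + h, width, height)
    bottom_right = pixel_to_ndc(x + w, y + h, width, height)
    # Two triangles sharing the top-right/bottom-left diagonal cover the rectangle.
    return [(top_left, bottom_left, top_right), (top_right, bottom_left, bottom_right)]
```

With a texture holding the window contents mapped onto those two triangles, the 3D hardware produces exactly the 2D window the user expects.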

2. Graphics Vendors.

Years ago, graphics chip vendors were proud to give out data sheets and programming information for their hardware. But as the patent libraries grew, the fear of infringement suits grew too. Now the graphics chip vendors hide all of the information about their chips as a way of making it harder for patent holders to search for infringements. At least, this is the reason the vendors give for hiding programming specs. They also claim that hidden specs make it harder for competitors to reverse engineer the chips, but I have my doubts about that too. All of these hidden specs make it very hard to write open source device drivers for these chips. Instead, we are being forced to rely on vendor-provided drivers for the newest hardware. Just in case you haven’t noticed, graphics vendors really only care about MS Windows, so they provide the minimum driver support they can get away with for Linux. There are exceptions. Intel provides open source drivers for some of their chips. Nvidia and ATI provide proprietary drivers, but they lag behind the Windows versions. The overall result is a very mixed set of drivers, ranging in quality from decent to non-existent.

3. Desktop Alternatives.

The desktop alternatives, Windows and Mac, both have GPU-accelerated desktops. They are obviously, visibly better than their predecessors. In some cases these new accelerated desktops can paint over one hundred times faster than under the old model. At some future point the graphics chip vendors are going to remove that small section labeled 2D and leave us with only 3D hardware. Microsoft and Apple seem to have already gotten the message that 3D is the path forward and are making the transition. On the other hand, several of the X developers have told me to stop talking about the competitive landscape. They say all they want to do is make X better. While X developers may not be concerned, I’m certain that executives at Red Hat and Novell won’t agree when they start losing sales because of a non-competitive GUI.

Using 3D for the desktop is not just about making more eye candy. A lot of the 3D-generated eye candy may just be glitz, but there are also valid reasons for using 3D. 3D is simply faster than 2D: no one is making their 2D functions faster, because all of the silicon engineering is going into 3D. You can do fast, arbitrary image processing for things like color space conversion, stretching/warping, etc. I’ve seen some extremely complex filtering done in real time with shader hardware that would take the main CPU several seconds a frame to do. 3D hardware also makes possible heterogeneous window depths (simultaneous 8-, 16- and 24-bit windows) with arbitrary colormaps, on-the-fly screen flipping/rotation for projectors, whole-screen scaling for the visually impaired, and so on. Resolution independence allows objects to be rendered at arbitrary resolution/size and down/up-sampled when shown on-screen. More interesting applications are described later in the windowing section.
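As an illustration of the per-pixel work a shader does for color space conversion, here is a Python sketch converting YUV (YCbCr) video pixels to RGB. It assumes full-range BT.601 coefficients as used by JPEG/JFIF; other video standards use different constants. A GPU would run the same arithmetic on every pixel in parallel, whereas this loop handles one pixel at a time on the CPU.

```python
def clamp8(value):
    """Round and clamp a channel value to the 0..255 range."""
    return max(0, min(255, int(round(value))))


def yuv_to_rgb(y, u, v):
    """Convert one full-range BT.601 (JFIF) YCbCr pixel to 8-bit RGB."""
    cb = u - 128  # chroma channels are stored offset by 128
    cr = v - 128
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    return (clamp8(r), clamp8(g), clamp8(b))


def convert_frame(pixels):
    """Apply the conversion to every pixel, as a fragment shader would."""
    return [yuv_to_rgb(y, u, v) for (y, u, v) in pixels]
```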

4. Current X.org Server.

X.org is about to release X11R7. The primary feature of this release is the modularization of the X source code base. While modularization doesn’t mean anything to most users, it will make it much easier to work on the X source code. Before modularization the X source tree consisted of about 16M lines of code all built as a single project. After modularization the tree will be split into dozens of independent pieces, making the project much easier to build and understand.

5. X, the Operating System.

Is X an application or an operating system? A good reference for understanding how an X server is put together is here; it’s 8 years old, but most of it is still relevant. Read it if you don’t know what DIX, mi, DDX, CFB, etc. mean. Somewhere around X11R6.3, XFree86 split off and the X server design changed to become extremely cross-platform. The various operating systems targeted by X have different levels of support for things like hardware probing. To address this, X added code to probe the PCI bus to find hardware, to find video ROMs and run them to reset the hardware, to find mice and keyboards and provide drivers for them, to manage the problems of multiple VGA devices, and ultimately even to provide its own module loader. This is the stage where X started blurring the line between application and operating system. While some operating environments desperately needed this OS-like support, implementing these features on operating systems that already provided the same services, like Linux, ultimately led to the current-day conflicts between X and the OS. Of course, operating systems are moving targets; decisions that made sense ten years ago may not make sense today.

The Linux kernel offers subsystems like PCI and framebuffer that are not present on BSD and some other platforms. In the interest of cross-platform support, the X server has built parallel implementations of these subsystems. In the past there was a need for this, but on current Linux systems it results in two different pieces of software trying to control the same hardware. Linux has multiple non-X users of the video hardware whose only point of interaction with X is the kernel. The kernel provides many mechanisms for coordinating these users: the PCI subsystem, input drivers, hotplug detection, and device drivers. In the interest of coordination on Linux, the best solution is to use the features offered by the kernel and run the duplicated X libraries only on the other platforms. Fbdev and XAA drivers are a prime example of duplicated drivers.
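On Linux, the kernel’s PCI subsystem has already enumerated the hardware and exports it through sysfs, so a display server could read that instead of probing the bus itself. A minimal Python sketch, assuming the standard `/sys/bus/pci/devices/<address>/class` files, where a base class of 0x03 means display controller and subclass 0x00 means VGA-compatible; the function name is hypothetical.

```python
import os

PCI_CLASS_DISPLAY = 0x03   # PCI base class: display controller
PCI_SUBCLASS_VGA = 0x00    # subclass 0x00: VGA-compatible controller


def find_display_devices(root="/sys/bus/pci/devices"):
    """Return (address, class_code, is_vga) for every display controller
    that the kernel's PCI subsystem has already enumerated."""
    found = []
    if not os.path.isdir(root):
        return found
    for addr in sorted(os.listdir(root)):
        class_path = os.path.join(root, addr, "class")
        try:
            with open(class_path) as f:
                class_code = int(f.read().strip(), 16)  # e.g. "0x030000"
        except (OSError, ValueError):
            continue  # unreadable or malformed entry: skip it
        base = (class_code >> 16) & 0xFF
        sub = (class_code >> 8) & 0xFF
        if base == PCI_CLASS_DISPLAY:
            found.append((addr, class_code, sub == PCI_SUBCLASS_VGA))
    return found
```

Passing a different `root` makes it easy to exercise the function against a fake directory tree instead of real hardware.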

Linux has an excellent hotplug system and the graphics system really needs to start using it. Screens can be hotplugged from multiple sources. There is the traditional source of hotplug: someone sticks a new video card into a hotplug chassis. But there are other, non-traditional ways to get a hotplug. Another user could be using a screen you want to attach to your screen group; when they log out, you get a screen hotplug. You could attach an external monitor to a laptop and generate a hotplug. You could wirelessly connect to a display wall with something like DMX or Chromium. USB is also the source of a lot of hotplug. A user can hotplug mice, tablets, keyboards, audio devices and even graphics adapters. The current X server handles none of this, since it does not communicate with the kernel’s hotplug system.
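To give a feel for what using the kernel’s hotplug system involves: the kernel announces device changes as uevent messages, which arrive on a netlink socket (NETLINK_KOBJECT_UEVENT) as an `ACTION@DEVPATH` header followed by NUL-separated `KEY=VALUE` pairs. The Python sketch below only parses such a payload and does not open the socket; the `HANDLERS` routing table is hypothetical and just indicates which part of a graphics stack might care about each subsystem.

```python
def parse_uevent(payload: bytes) -> dict:
    """Parse a kernel uevent: 'ACTION@DEVPATH' header, then NUL-separated KEY=VALUE."""
    fields = payload.rstrip(b"\0").split(b"\0")
    action, _, devpath = fields[0].decode().partition("@")
    event = {"ACTION": action, "DEVPATH": devpath}
    for field in fields[1:]:
        key, sep, value = field.decode().partition("=")
        if sep:  # ignore anything that is not KEY=VALUE
            event[key] = value
    return event


# Hypothetical routing: which part of a graphics stack would react to which subsystem.
HANDLERS = {
    "input": "input hotplug (mouse, keyboard, tablet)",
    "drm": "display hotplug (new GPU or connector change)",
    "usb": "USB device attached to a console group",
    "sound": "audio device hotplug",
}


def route(event: dict) -> str:
    """Pick a handler description for an event, or 'ignored'."""
    return HANDLERS.get(event.get("SUBSYSTEM", ""), "ignored")
```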

A standard Linux desktop uses rooted X. Rooted X means that X controls the drawing of the top-level desktop and the windows placed on it. Cygwin/X, DirectFB and Apple Darwin all use rootless X. These environments have another windowing system in charge of the display. These host windowing systems draw the main desktop and implement their own windowing API. X can be integrated into a host windowing system like this by running in rootless mode. In rootless mode, X application windows are drawn to buffers in system memory. At the appropriate times, the X windows are synchronized with the host windowing system and their contents are displayed. Rootless X also provides protocols for handing off mouse and keyboard events between the two environments.

6. X Render.

In 2000, KeithP declared the existing X server incapable of efficiently drawing clear, antialiased text and came up with the X Render extension to address the problem. X Render is the core X feature enabling things like pretty fonts and Cairo to be implemented on the X server. X Render adds Porter-Duff operators to the X server. These operators allow surfaces to be combined in various ways. They are very similar to the OpenGL concepts of textures and texture operations, but the two don’t match exactly.
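Over, the most commonly used Porter-Duff operator, places a source surface on top of a destination. With premultiplied alpha (color channels already multiplied by alpha, the form X Render works with), each channel is computed as result = src + dst * (1 - src_alpha). A minimal per-pixel Python sketch with channels as floats in 0.0..1.0; real implementations operate on whole surfaces, usually in fixed point.

```python
def premultiply(r, g, b, a):
    """Convert straight RGBA to premultiplied RGBA."""
    return (r * a, g * a, b * a, a)


def over(src, dst):
    """Porter-Duff OVER on premultiplied RGBA tuples:
    every channel (alpha included) is src + dst * (1 - src_alpha)."""
    src_alpha = src[3]
    return tuple(s + d * (1.0 - src_alpha) for s, d in zip(src, dst))
```

Because the same formula applies to alpha, compositing several translucent layers is just repeated calls to `over`, which maps directly onto texture blending in 3D hardware.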

7. XAA.

XAA, the X Acceleration Architecture, was introduced in XFree86 4.0. To achieve cross-platform portability, XAA implements 2D video drivers in user space without using kernel device drivers. User space drivers do work, but since there are no kernel-based drivers, the Linux kernel has no way to track what X is doing to the hardware. X doesn’t start running until after your system has booted. You’d like to have a display during the boot process in case something goes wrong with starting X, right? Boot display on Linux is implemented with text mode (console) drivers, the most common of which is VGAcon. So under Linux you end up with two device drivers, X and console, both trying to control the same piece of hardware. The combination of XAA, the console and the Linux kernel’s Virtual Terminal feature can result in a lot of conflicts, but more on this later.

8. EXA.

EXA replaces the existing 2D XAA drivers, allowing the current server model to work a while longer. EXA extends the XAA driver concept to use the 3D hardware to accelerate the X Render extension. EXA was originally presented as a solution for all hardware, including old hardware. But this isn’t true. If old hardware lacks the features needed to accelerate Render, there is nothing EXA can do to help. So it turns out that the hardware EXA works on is the same hardware we already had OpenGL drivers for. The EXA driver programs the 3D hardware from the 2D XAA driver, adding yet another conflicting user to the long line of programs all trying to use the video hardware at the same time. The end result is that EXA is just a band-aid that will keep the old X server code going another year or two. There is also a danger that EXA will keep expanding to expose more of the chip’s 3D capabilities. The EXA band-aid will work, but it is not a long-term fix. Its existence also serves to delay the creation of a long-term fix. A key point to remember is that EXA/Render is still just a subset of the broader Mesa OpenGL API, and over time we always want more and more features.

9. Cairo.

Cairo’s goal is to be a high-quality 2D drawing API that is equally good for printing and screens. It is designed with the X Render extension in mind and implements the PDF 1.4 imaging model. Interesting operations include stroking and filling cubic Bézier splines, transforming and compositing translucent images, and antialiased text rendering. The Cairo design is portable and allows pluggable drawing backends such as image, glitz, PNG, PostScript, PDF, SVG, Quartz, GDI and Xlib. Cairo has been under development for about two years now and should see deployment in upcoming versions of GDK and Mozilla. Cairo’s main goal is to make it easier for developers to produce high-end 2D graphics on the screen and then easily print them. The pluggable backends allow an application to use the same code to draw and print.
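The pluggable backend idea can be sketched as follows: application code draws through one interface, and each backend decides what a drawing operation produces. These Python classes are hypothetical stand-ins, not Cairo’s API; a raster backend fills pixels for the screen, while a vector backend records resolution-independent commands of the kind a print backend would emit.

```python
class ImageBackend:
    """Hypothetical raster backend: fills pixels in an in-memory image."""

    def __init__(self, width, height):
        self.width, self.height = width, height
        self.pixels = [[0] * width for _ in range(height)]

    def fill_rect(self, x, y, w, h, color):
        # Clip the rectangle to the image, then fill it pixel by pixel.
        for row in range(max(0, y), min(self.height, y + h)):
            for col in range(max(0, x), min(self.width, x + w)):
                self.pixels[row][col] = color


class VectorBackend:
    """Hypothetical vector backend: records commands instead of pixels."""

    def __init__(self):
        self.commands = []

    def fill_rect(self, x, y, w, h, color):
        self.commands.append(("rect", x, y, w, h, color))


def draw_figure(backend):
    """Application drawing code, written once and unaware of the backend."""
    backend.fill_rect(0, 0, 4, 2, 1)
    backend.fill_rect(1, 1, 2, 2, 2)
```

The same `draw_figure` call produces pixels for the screen or a command list for a printer, which is the property that lets one code path serve both uses.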

One of the Cairo backends, named glitz, implements Cairo using OpenGL. There is a good paper that explains glitz in detail. Since Cairo implements both an Xlib and an OpenGL backend, a direct performance comparison can be made between Xlib and OpenGL. In the benchmarks that have been published, OpenGL beats XAA by anywhere from ten-to-one to a hundred-to-one in speed. This large performance differential is due to glitz/OpenGL’s use of the 3D hardware on the graphics chip. Comparing Cairo on glitz and Xlib is a good way to illustrate that 3D hardware is perfectly capable of drawing 2D screens.

10. DRI and OpenGL.

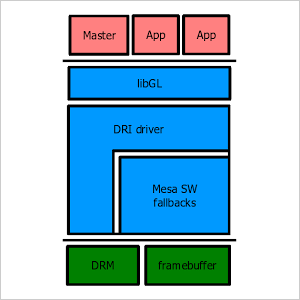

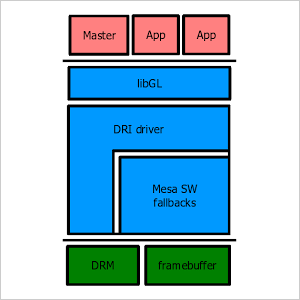

DRI, the Direct Rendering Infrastructure, implements OpenGL in cooperation with the X server. DRI has four main components. First, libGL, which provides the OpenGL API and acts as a switch between multiple drivers. Next, there is the hardware-specific DRI library, which programs the graphics chip. For OpenGL features not provided by the DRI driver you need a software fallback implementation; the fallback is provided by Mesa. Note that Mesa is a complete OpenGL implementation in software. A card that provides no acceleration can still implement OpenGL by falling back to Mesa for every function. Finally, there is DRM, the Direct Rendering Manager. DRM drivers run in the kernel, managing the hardware and providing needed security protection.

An interesting aspect of DRI is the ‘direct’ part of the name. Each application using DRI directly programs the video hardware. This is different from X, where you send drawing commands to the server and the server programs the hardware on your behalf. The DRM kernel driver coordinates the multiple users to prevent interference. The advantage of this model is that OpenGL drawing can occur without the overhead of process swaps and data transmission to a controlling server. One disadvantage is that the graphics card may have to deal with a lot of graphics context switches. The workstation cards seem to do a decent job of this, but some consumer cards do not. Microsoft has already encountered this problem and requires advanced hardware context switching support for DirectX 10-capable hardware.

DRM also implements the concept of a master user with more capabilities than normal users. These extra capabilities allow the graphics device to be initialized and the consumption of GPU resources to be controlled. The current X server, which runs as root, functions as the DRM master. There is actually no real requirement that the DRM master be run as root, and there is a preliminary patch that removes this requirement.
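A toy Python model of the master concept follows. It mirrors the authentication scheme libdrm exposes through `drmGetMagic()` (client side) and `drmAuthMagic()` (master side): a client obtains a token, passes it to the master, and the master vouches for it. Everything else here, including the rule that the first opener becomes master, is a simplifying assumption rather than the kernel’s actual interface.

```python
import secrets


class DrmDevice:
    """Toy model of DRM master/authentication; not the kernel's real interface."""

    def __init__(self):
        self.master = None
        self.pending = {}           # magic token -> client awaiting authentication
        self.authenticated = set()

    def open(self, client):
        # Modeling assumption: the first opener (today, the X server) becomes master.
        if self.master is None:
            self.master = client
            self.authenticated.add(client)

    def get_magic(self, client):
        # Client side: get a unique token to hand to the master over some IPC channel.
        magic = secrets.randbits(32)
        while magic in self.pending:
            magic = secrets.randbits(32)
        self.pending[magic] = client
        return magic

    def auth_magic(self, caller, magic):
        # Master side: only the master may vouch for a client's token.
        if caller != self.master:
            raise PermissionError("only the DRM master can authenticate clients")
        if magic not in self.pending:
            raise KeyError("unknown magic token")
        self.authenticated.add(self.pending.pop(magic))

    def submit(self, client, commands):
        # Direct rendering: authenticated clients program the hardware themselves.
        if client not in self.authenticated:
            raise PermissionError(f"{client} is not authenticated")
        return len(commands)
```

Nothing in this model depends on the master running as root; it only needs to hold the master role.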

When the hardware is missing a feature, Mesa implements the feature in software. This is termed a software fallback. People are confused by this. They say their OpenGL isn’t fully accelerated but their X server is. Think about it. Both drivers are running on the same hardware. OpenGL isn’t fully accelerated because it offers many features, not all of which the hardware can accelerate, while X offers only a few. If you pick features that are in both driver APIs, they will probably both be accelerated. If they are not, start programming and go fix the appropriate driver.
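A minimal Python sketch of software fallback: start with a software implementation for every entry point (standing in for Mesa), then override the entries the hardware driver accelerates. The entry-point names and driver capabilities are hypothetical.

```python
def _software(name):
    """Return a stand-in for a Mesa software implementation of `name`."""
    def impl():
        return f"{name}: software"
    return impl


# Hypothetical subset of OpenGL entry points, all of which have software versions.
GL_FUNCTIONS = ["clear", "draw_triangles", "blend", "texture_3d", "accum_buffer"]
SOFTWARE = {name: _software(name) for name in GL_FUNCTIONS}


def build_dispatch(hw_funcs):
    """Start from software for everything, then override with what the driver accelerates."""
    table = dict(SOFTWARE)
    for name, fn in hw_funcs.items():
        if name in table:
            table[name] = fn
    return table


def accelerated(table):
    """Names of entry points served by hardware rather than the fallback."""
    return sorted(name for name, fn in table.items() if fn is not SOFTWARE[name])
```

An X-style API exposing only `clear` and `blend` would look fully accelerated against such a driver, while the OpenGL table built on the same hardware still falls back to software for `texture_3d` and `accum_buffer`.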

11. Security and root.

The Linux kernel contains about 10M lines of code that run as root. The X server contains 16M lines of code, a lot of which runs as root. If you’re looking for security holes, where do you have better odds? There is no technical reason requiring the X server to run as root. In the name of cross-platform compatibility, the current X server runs as root in order to program the video hardware from user space. Linux has a solution for this: you put the privileged code into a device driver and run the user space application without privilege. The privileged device driver for an average video card is about 100KB. That is a whole lot less code to audit than 16M lines.

12. Older hardware.

Any video hardware, even a dumb VGA adapter, can run the X server, and Mesa will happily implement the entire OpenGL API in software. The question is how fast. No amount of programming is going to turn a VGA card into an ATI X850. This old hardware is well served by the current X server. But new systems are being designed around new hardware, and they may perform badly on old hardware. You may want to consider upgrading your video hardware; graphics cards capable of decent OpenGL performance can be bought brand new for $40 and for even less used. Alternatively, you may choose not to upgrade and to continue running the same software that has served you well in the past.

13. Kernel Graphics Support.

When the kernel first boots, you see the boot console. On x86, the most common boot console is VGAcon. VGAcon uses the legacy VGA hardware support of your graphics card. Since almost all x86 graphics cards support VGA, VGAcon provides a universal console for this platform. On non-x86 platforms you may not have VGA hardware support. On these platforms you usually load a chip-specific framebuffer driver. The kernel offers numerous fbdev drivers, so a very broad range of hardware is supported.

14. VGA Legacy.

The original design of the IBM PC had a somewhat limited set of peripherals located at fixed, well known addresses, for example COM1 at 0x3F8. Unfortunately, the VGA support on most graphics cards is one of those legacy devices located at a fixed address. As long as you only have one graphics card in your system, VGA is not a problem. Plug in a second one and now you have two pieces of hardware both wanting to occupy the same bus address.

The current X server has code to deal with multiple VGA adapters. But this X server code is unaware of other users of these devices and it will stomp all over them. Stomping on these other programs is not nice, so we need some way to coordinate multiple users of the VGA device. On Linux the best solution for this is to add a VGA arbitration mechanism to the kernel. BenH has been working on one that was discussed at OLS.
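A toy model of what VGA arbitration has to do: every VGA-compatible card decodes the same legacy ranges (I/O ports 0x3B0 to 0x3DF and the memory window at 0xA0000 to 0xBFFFF), so only one card may have legacy decoding enabled at a time. This Python sketch is hypothetical and does not describe BenH’s actual design; a real arbiter would also make waiters block and would change each card’s PCI decode settings.

```python
import threading

# Legacy VGA resources that every VGA-compatible card decodes at fixed addresses.
VGA_IO = (0x3B0, 0x3DF)        # I/O port range
VGA_MEM = (0xA0000, 0xBFFFF)   # legacy memory window


def overlaps(a, b):
    """True if two inclusive address ranges intersect."""
    return a[0] <= b[1] and b[0] <= a[1]


class VgaArbiter:
    """Toy arbiter: at most one card decodes the legacy VGA ranges at a time."""

    def __init__(self, cards):
        self.decoding = {card: False for card in cards}
        self.owner = None
        self._lock = threading.Lock()

    def try_acquire(self, card):
        with self._lock:
            if self.owner not in (None, card):
                return False  # another card currently holds the legacy resources
            for other in self.decoding:
                # A real arbiter would toggle PCI command/bridge decode bits here.
                self.decoding[other] = (other == card)
            self.owner = card
            return True

    def release(self, card):
        with self._lock:
            if self.owner == card:
                self.owner = None
```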

Another problem is the initialization of multiple video cards. Video cards have ROMs, often referred to as VBIOS, which are executed at boot to initialize the hardware. Due to legacy issues, many of these initializations make use of VGA support. Since we can only have one legacy VGA device, the system BIOS punts the problem and only initializes the first video card. It is possible to initialize secondary cards at a later point during boot. This is achieved by running the secondary VBIOS using vm86 mode and coordinating use of the single legacy VGA device. To make matters more complicated, there are two common ROM formats: x86 code and Open Firmware. Since vendors charge a lot more for their Open Firmware models, it is common for people to use x86 video hardware in non-x86 machines. To make this work, the VBIOSes have to be run using an x86 emulator. BenH is working on a solution for this problem too.

Next is fbdev, also referred to as framebuffer. Under Linux, fbdev is primarily responsible for initializing the hardware, detecting attached displays, determining their valid modes, setting the scanout mode and configuration, the hardware cursor, blanking, color mapping, panning, and suspend/resume. There are a lot of framebuffer drivers in the kernel, and they implement these features to varying degrees. Note that while the interface to fbdev is kernel based, there is nothing stopping fbdev support from having helper apps implemented in user space. Helper apps could be used to make VBIOS calls in situations where source code is not available.
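Determining valid modes comes down to simple arithmetic on the mode timings: horizontal sync frequency = pixel clock / htotal, and vertical refresh = pixel clock / (htotal * vtotal), where the totals include blanking. The Python sketch below checks three standard VESA timings against a monitor’s sync ranges; the monitor limits used are hypothetical, and a real driver would get them from the display’s EDID.

```python
from dataclasses import dataclass


@dataclass
class Mode:
    name: str
    pixclock_khz: float  # dot clock in kHz
    htotal: int          # visible width plus horizontal blanking, in pixels
    vtotal: int          # visible height plus vertical blanking, in lines

    @property
    def hsync_khz(self):
        return self.pixclock_khz / self.htotal

    @property
    def vrefresh_hz(self):
        return self.pixclock_khz * 1000.0 / (self.htotal * self.vtotal)


def valid_modes(modes, hsync_range_khz, vrefresh_range_hz):
    """Keep only the modes whose sync rates fall inside the monitor's ranges."""
    lo_h, hi_h = hsync_range_khz
    lo_v, hi_v = vrefresh_range_hz
    return [m.name for m in modes
            if lo_h <= m.hsync_khz <= hi_h and lo_v <= m.vrefresh_hz <= hi_v]


# Standard VESA timings (pixel clock, horizontal total, vertical total).
MODES = [
    Mode("640x480@60", 25175, 800, 525),
    Mode("1024x768@60", 65000, 1344, 806),
    Mode("1280x1024@60", 108000, 1688, 1066),
]
```

For example, a monitor limited to 30-50 kHz horizontal sync accepts the first two modes but rejects 1280x1024, whose horizontal sync is about 64 kHz.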

When you run a VBIOS, it creates a set of very low-level entry points which can be used to control the display. This is getting very detailed, and I won’t explain all of the differences between VGA, Int10 and VESA. As an overview, VGA is the base hardware register standard established by IBM. Int10 refers to using software interrupts to perform display output. Software interrupts only work in x86 real mode and are not commonly used any more except by things like GRUB. The code to support Int10 comes from the VBIOS and is installed when the ROM is run. There can only be one installed Int10 driver. VESA took the Int10 model and extended it to work in protected mode. When configuring the kernel there are three drivers: VGA and VESA fbdev, which also need fbconsole loaded to run, and VGAcon, which is all-inclusive. On non-x86 you usually need fbdev and fbconsole.

15. Making multiuser work.

Linux is a multiuser operating system. It has always been that way. But the only way to connect multiple users has been over serial lines and the network. What happens if you install multiple video cards and run multiuser locally? It doesn’t work. There have been patches to try and make this work such as the Linux console project and various hacks on X. The problem is that the Linux console Virtual Terminal system controls the local video cards and it effectively only supports a single user. Note that fbdev works fine when used by multiple users, it is the VT system that is single user.

Multiple local users have application in places like schools, monitoring systems, internet cafes and even at home. Past demand for multiple video card support has not been that high due to the common PC only supporting a single AGP slot. Multiple PCI video cards do work but their performance is not high. There are high end machines with multiple AGP slots but they aren’t very common. PCI express changes this. With PCI express, all slots are functionally equal. The only thing that varies is how many lanes go to each slot. This hardware change makes it easy to build systems with multiple, high performance video cards. PCIe support chips are planned that can allow up to sixteen video cards in a single system.

16. Splitting console.

When analyzing the console system, you will soon notice that there are two different classes of console use. The system console provides the boot display and is used for system error reporting, recovery and maintenance. The user console is a normal display that a user logs in to for command line activities or editing. In the current Linux console system, both uses are serviced by the same console code.

It is possible to split the console code up by type of use and fix a lot of the current issues with the Linux console. To begin, the system console should be totally reliable and tamper-proof. It does not have to be fast. It needs to be implemented with the simplest code possible, and it needs to work at interrupt time and from kernel panics. Uses include system recovery and single user mode. Boot time display and kdbg support are also possible. The system console would provide SAK and the secure login screen. To support independent users logged into each head of a video card, it needs to be implemented independently on each head. One way to access the new system console would be to hit SysRq; the console will overlay your current display and use your current video mode. The Novell kernel debugger works this way. The system console does not support VTs and there are no console swaps. Since it knows your video mode, it can preempt your display in an emergency, for example a fatal kernel oops.

The design for system console uses fbdev to track the mode and where the scanout buffer is located. To make it as reliable as possible all acceleration support is removed. Fbconsole then uses the system CPU to directly manipulate the framebuffer. The console is displayed by drawing directly into the scanout buffer using the existing bitmap font support in fbconsole.
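A minimal Python sketch of the CPU drawing a glyph into the scanout buffer: the byte offset of pixel (x, y) is y * line_length + x * bytes_per_pixel, where line_length is the stride fbdev reports (it can exceed width * bytes_per_pixel). The sketch assumes 32 bits per pixel in little-endian byte order and uses a hypothetical 8x8 glyph; a real fbdev console would also add the current pan offsets.

```python
def put_pixel(fb, line_length, bytes_per_pixel, x, y, color):
    """Write one pixel into a linear framebuffer (bytearray)."""
    offset = y * line_length + x * bytes_per_pixel
    fb[offset:offset + bytes_per_pixel] = color.to_bytes(bytes_per_pixel, "little")


def draw_glyph(fb, line_length, bytes_per_pixel, glyph, x0, y0, fg, bg):
    """Draw an 8-pixel-wide bitmap glyph: one byte per row, MSB = leftmost pixel."""
    for row, bits in enumerate(glyph):
        for col in range(8):
            lit = bits & (0x80 >> col)
            put_pixel(fb, line_length, bytes_per_pixel, x0 + col, y0 + row,
                      fg if lit else bg)


# Hypothetical 8x8 bitmap for the letter 'T'.
GLYPH_T = [0xFF, 0x18, 0x18, 0x18, 0x18, 0x18, 0x18, 0x00]
```

No acceleration is involved: the only requirement is knowing the mode, the stride and where the scanout buffer lives, which is exactly what makes this path simple enough to trust during a panic.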

The user console is the opposite of system console, it needs to be high performance and user friendly. A user space implementation makes it easy to handle multiuser by creating a process for each user. User space allows full GPU based acceleration via fbdev and DRM. You also have easy access to Xft/FreeType for full Unicode support. With proper design the console can even allow different users on each head. By coding the appropriate hot keys it can be made to behave just like existing VTs and support console swaps.

Since the current consoles are combined, when you VT switch you get both types. In the new model, the current VT swap keys would give you user space consoles, and SysRq would activate the system console. The shell process attached to the system console could run at high priority, making it easier to take back control from an errant process.

17. Grouping Hardware.

Local multiuser support implies that Linux will implement the concept of console groups for UI oriented peripherals. Console groups are collections of hardware that go together to form a login console. An example group would include a display, mouse, keyboard and audio device. At login, PAM assigns ownership of these devices to the logged in user. An interesting add-on to this concept is to include a USB hub or port as part of the console group. While the user is logged on, anything plugged into the USB port will also belong to them. At logout everything goes back into the unassigned pool.
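The console group idea can be modeled in a few lines. In this hypothetical Python sketch, the group contents, device names and USB port identifiers are made up; on a real system the ownership changes would be made by PAM changing the permissions on the device nodes.

```python
class ConsoleGroups:
    """Toy model of console groups and device ownership."""

    def __init__(self):
        self.groups = {}     # group name -> set of device ids
        self.usb_ports = {}  # USB port -> group name
        self.owner = {}      # device id -> user (absent means unassigned pool)
        self.logged_in = {}  # group name -> user

    def define(self, group, devices, usb_port=None):
        self.groups[group] = set(devices)
        if usb_port is not None:
            self.usb_ports[usb_port] = group

    def login(self, group, user):
        # At login, every device in the group is handed to the user.
        self.logged_in[group] = user
        for dev in self.groups[group]:
            self.owner[dev] = user

    def hotplug(self, device, usb_port):
        # A device plugged into a group's port joins the group and its current user.
        group = self.usb_ports.get(usb_port)
        if group is None:
            return None
        self.groups[group].add(device)
        user = self.logged_in.get(group)
        if user is not None:
            self.owner[device] = user
        return user

    def logout(self, group):
        # At logout, everything returns to the unassigned pool.
        self.logged_in.pop(group, None)
        for dev in self.groups[group]:
            self.owner.pop(dev, None)
```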

18. Alternate alternatives.

The little guys: DirectFB, svgalib, Fresco, Y Windows, FBUI, etc. Linux attracts a lot of people that want to experiment with display code. In the current VT model these other display systems cause a lot of problems on VT swap. After swapping VTs, the newly activated display system is allowed to do anything it wants to the hardware. That includes reinitializing it, reprogramming it and wiping its VRAM. When you swap back, the original system is expected to recover from the altered hardware state. It’s not just the little guys that can cause problems with VT swap. You can swap between the console system and X, or even between two desktops like X and Xegl. This was probably a fine model for VGA adapters with 14 registers and 32KB of VRAM. It is not a good model for a video card with 300 registers, 512MB of VRAM and an independent GPU coprocessor.

19. Cooperating Effectively.

I believe the best solution to this problem is for the kernel to provide a single, comprehensive device driver for each piece of video hardware. This means that conflicting drivers like fbdev and DRM must be merged into a cooperating system. It also means that poking hardware from user space while a kernel based device driver is loaded should be prevented. I suspect that if Linux offered comprehensive standard drivers for the various video cards, much of the desire to build yet another version of the Radeon driver would disappear.

This does not mean that projects like fresco can’t develop their own device drivers for the video hardware. All this means is that you will have to unload the standard driver and then load your custom driver before running the new program. This unload/load behavior is no different from that of every other driver in the kernel. Using a hot key to jump (VT swap) between two active video device drivers for the same piece of hardware would no longer be supported. If a base driver is missing needed features, a better approach would be to submit patches to the standard driver. By implementing needed extensions in the standard driver, all programs can share them, and it would be easy to swap between apps using them with the user space console system.

If we are going to keep the VT design of having a hot key to jump between video drivers, I think it is only fair that a hot key for jumping between disk and network drivers also be implemented.

20. OpenGL|ES.

Khronos Group is a new standards group formed by over one hundred member corporations. Khronos Group’s most successful standard is OpenGL ES. OpenGL ES defines a very useful subset of OpenGL targeted at low memory systems. Khronos also defines EGL, which is a platform independent equivalent to the OpenGL GLX/agl/wgl APIs. “EGL provides mechanisms for creating rendering surfaces onto which client APIs like OpenGL ES and OpenVG can draw, create graphics contexts for client APIs, and synchronize drawing by client APIs as well as native platform rendering APIs. This enables seamless rendering using both OpenGL ES and OpenVG for high-performance, accelerated, mixed-mode 2D and 3D rendering.”

EGL assumes the existence of a windowing system provided by other parts of the operating system. But EGL’s design is platform independent: unlike GLX/agl/wgl, nothing in the EGL API is specific to a particular windowing system. All references to the local windowing system are handled with opaque pointers.

Mesa developers have put together a proposal for extending EGL so that a windowing system can be implemented on top of EGL. The core of this extension provides an API to enumerate the available screens, set modes and framebuffer configs on a screen, pan the screen, and query attributes. Two areas that still need to be addressed by EGL extensions are hardware cursor support and color mapping. Adding these extensions to EGL provides enough control over the hardware to implement a server and windowing system like Xegl. OpenGL plus EGL and the Mesa extensions provide a truly portable API for accessing many forms of graphics hardware, ranging from current cell phones to the PlayStation 3 to PCs to graphics supercomputers.

The Extended EGL API is well matched to Linux. It provides a solid foundation to construct windowing systems or embedded apps. It’s easy to use, making it a fun platform for R&D and experimentation. It lets you focus on your new app or windowing system and forget about all of the complexities of dealing with the hardware.

I believe that Khronos Group represents a major untapped opportunity for the X.org and Linux graphics communities. Most of the Khronos standards are lacking open source reference implementations, development system support, and conformance tests. Many of the Khronos Group backers sell production systems based on Linux. If X.org were to expand its charter, it could approach Khronos Group about being a neutral non-profit vehicle for building open source reference implementations and development systems based on Linux and the Khronos standards. The partnership would allow Khronos Group members to make charitable contributions to X.org similar to IBM and the Eclipse Foundation.

21. Pixel Perfect.

Pixel perfect accuracy is a myth. All you can do is achieve degrees of accuracy. Sources of error are everywhere: the evenness of your LCD’s backlight, the consistency of printer ink, the quality of the DACs, the reflectivity of paper, problems with color matching, different drawing algorithms implemented in the GPU, etc. OpenGL does not guarantee pixel perfect accuracy between implementations. The closest you are going to get to pixel perfection is to use exactly the same version of software Mesa on each of your targets. By the way, the X server isn’t pixel accurate either. My rule of thumb is that if it takes a magnifying glass to tell the difference, it is close enough. People get confused about this point. If you hand OpenGL a bitmap to display, it is going to copy those pixels to the screen unchanged unless you tell it to change them. The pixel drawing accuracy argument applies more to scalable vector primitives like lines.
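
To make the line-drawing point concrete, here is a small sketch that rasterizes the same segment with two standard algorithms: a floating point DDA that rounds half up, and integer Bresenham, which breaks ties the other way. The helper names are hypothetical and both functions only handle lines with 0 <= slope <= 1 and x0 < x1. Both algorithms are correct, yet they disagree on individual pixels, so a tolerance check rather than exact equality is the realistic comparison.

```python
# Hypothetical helpers for illustration; not taken from Mesa or the X server.

def line_dda(x0, y0, x1, y1):
    """DDA: step x, compute y in floating point, round half up."""
    slope = (y1 - y0) / (x1 - x0)
    return [(x, int(y0 + slope * (x - x0) + 0.5)) for x in range(x0, x1 + 1)]

def line_bresenham(x0, y0, x1, y1):
    """Integer-only Bresenham for 0 <= slope <= 1; ties round down."""
    dx, dy = x1 - x0, y1 - y0
    err = 2 * dy - dx          # decision variable
    y = y0
    pixels = []
    for x in range(x0, x1 + 1):
        pixels.append((x, y))
        if err > 0:            # the ideal line has moved past the pixel midpoint
            y += 1
            err -= 2 * dx
        err += 2 * dy
    return pixels

def max_pixel_difference(a, b):
    """Largest vertical disagreement between two rasterizations of one x span."""
    assert [x for x, _ in a] == [x for x, _ in b]
    return max(abs(ya - yb) for (_, ya), (_, yb) in zip(a, b))
```

For the segment (0, 0) to (2, 1), `line_dda` yields `[(0, 0), (1, 1), (2, 1)]` while `line_bresenham` yields `[(0, 0), (1, 0), (2, 1)]`. Each stays within half a pixel of the ideal line, so they never differ by more than one pixel: a difference you need a magnifying glass to see.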

Subpixel antialiased fonts are not an issue; OpenGL offers multiple solutions for displaying subpixel, antialiased fonts. If you choose, OpenGL is capable of using the exact same mechanism for glyph display that the X server uses today. Since it is the same mechanism, the glyphs will appear identical. It is also undesirable to lock drawing into a specified algorithm; doing so hampers forward progress. For example, this paper (video) explores a completely new way of generating fonts on the GPU. There is another interesting paper, "Resolution Independent Curve Rendering using Programmable Graphics Hardware" by Loop and Blinn, proceedings SIGGRAPH 2005 (no free link). If font drawing were specified with pixel perfect precision, it would probably be impossible to use these new techniques. These glyphs are generated by the GPU and use algorithms that are built into the hardware and are not alterable by the user. Converting outline glyphs to pixels in order to render them as blended images is just one way to display glyphs. Programmable GPUs are providing new alternatives. Any long lived API will need to take this into account.

22. Three Generations of Windows.

In the current X server and many other windowing systems, windows are drawn onto the screen in clip regions using the painter’s algorithm. The painter’s algorithm works just like real paint: each successive layer obscures the previous layer. You draw the background first and then draw each window in reverse Z order. If there is no translucency and you know where all of the windows are going, you can optimize this algorithm (like X does) with clip rects so that each pixel on the screen is only painted once. Performance can be improved by tracking damage. Only the windows in the damaged area need to be redrawn and the rest of the screen is protected by clip regions. Further gains are made by ‘save unders’. The windowing system notes that things like menu popups repaint the same contents to the screen when dismissed. A ‘save under’ saves the screen under these popup windows and replaces it when done.
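
A toy sketch of the painter’s algorithm and a save under follows, assuming a framebuffer modeled as a grid of characters and windows modeled as opaque rectangles listed bottom-most first. The helper names are hypothetical, not X server functions, and the clip-rect optimization is deliberately left out, so overlapped pixels get painted more than once.

```python
# Hypothetical toy model; not X server code. Windows are (x, y, w, h, color).

def new_screen(width, height, fill="."):
    return [[fill] * width for _ in range(height)]

def paint_rect(fb, x, y, w, h, color):
    """Fill a rectangle, clipped to the framebuffer bounds."""
    for row in range(max(y, 0), min(y + h, len(fb))):
        for col in range(max(x, 0), min(x + w, len(fb[0]))):
            fb[row][col] = color

def painters_algorithm(width, height, windows):
    """Background first, then each window from the bottom of the stack up."""
    fb = new_screen(width, height)
    for x, y, w, h, color in windows:
        paint_rect(fb, x, y, w, h, color)   # later windows obscure earlier ones
    return fb

def save_under(fb, x, y, w, h):
    """Copy the pixels a popup is about to cover (popup assumed on screen)."""
    return [row[x:x + w] for row in fb[y:y + h]]

def restore_under(fb, x, y, saved):
    """Put the saved pixels back when the popup is dismissed."""
    for dy, row in enumerate(saved):
        fb[y + dy][x:x + len(row)] = row
```

With `windows = [(0, 0, 4, 3, "A"), (2, 1, 4, 3, "B")]`, `painters_algorithm(6, 4, windows)` produces the rows `AAAA..`, `AABBBB`, `AABBBB`, `..BBBB`: window B was painted last, so it wins wherever the two overlap. A popup drawn between `save_under` and `restore_under` leaves no trace and needs no window to repaint.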

Composite takes advantage of performance enhancements in recent hardware. With composite, windows are drawn into off screen, non-visible video memory. The window manager then composites the windows together to form the visible screen. Compositing still uses the painter’s algorithm, but since the window manager always has all of the window contents, it is possible to implement translucent windows. Translucency is created by blending the contents of the screen under the window with the previous window contents as it is copied to the scanout buffer. This is called alpha blending. Most modern hardware supports it. Drawing flicker is also eliminated by double buffering. In this model everything is still 2D and has a simple Z order.
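
The blend step can be sketched in a few lines. This assumes 8-bit RGB pixels, a single non-premultiplied alpha value for the whole window, and the classic "source over destination" formula with integer rounding; the function names are hypothetical and real hardware does this per pixel in the blend unit.

```python
# Hypothetical sketch of "over" blending during the copy to scanout.

def blend_over(src, dst, alpha):
    """Blend an 8-bit RGB source pixel over a destination pixel.

    alpha is the window's opacity in 0..255 (non-premultiplied).
    result = src * a + dst * (1 - a), rounded to the nearest integer.
    """
    return tuple((s * alpha + d * (255 - alpha) + 127) // 255
                 for s, d in zip(src, dst))

def composite_window(scanout, window, x, y, alpha):
    """Copy a window's pixels into the scanout buffer, blending as we go."""
    for dy, row in enumerate(window):
        for dx, pixel in enumerate(row):
            under = scanout[y + dy][x + dx]
            scanout[y + dy][x + dx] = blend_over(pixel, under, alpha)
```

An alpha of 255 reproduces the window exactly, 0 leaves the screen untouched, and `blend_over((255, 0, 0), (0, 0, 255), 128)` gives `(128, 0, 127)`, a roughly even mix of red over blue.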

Higher end multitexture hardware can avoid using the painter’s algorithm altogether. Each window intersecting the screen region of interest would be bound as a separate texture. You would then draw polygons to the screen representing the windows. These polygons would have appropriate texture coords at the polygon vertices for each window/texture, then the texture combiner hardware would combine all the windows/textures together and produce the expected result. In an extreme case, the entire screen could be redrawn by rendering a single multi-textured rectangle! This technique is extremely fast; you may not even need double buffering since the flicker is minimal.
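
The core arithmetic of the single-rectangle approach is giving each texture unit its own coordinates at the same quad vertices. Here is a minimal sketch of that calculation, assuming axis-aligned windows, screen and texture y both growing downward, and a clamp-to-border mode with a transparent border so that coordinates outside 0..1 contribute nothing. The function name is hypothetical and no real OpenGL calls are made.

```python
# Hypothetical helper; computes per-texture-unit coords for one screen quad.

def quad_texcoords(region, windows):
    """Texture coordinates of one screen-aligned quad for every window.

    region:  (x, y, w, h) of the screen area being redrawn.
    windows: list of (x, y, w, h) window rectangles, one per texture unit.
    Returns one (u0, v0, u1, v1) tuple per window. Values outside 0..1 mean
    that part of the quad falls outside the window.
    """
    rx, ry, rw, rh = region
    coords = []
    for wx, wy, ww, wh in windows:
        coords.append(((rx - wx) / ww, (ry - wy) / wh,
                       (rx + rw - wx) / ww, (ry + rh - wy) / wh))
    return coords
```

For a 400x300 screen with a full-screen background window and a 200x100 window at (100, 50), the background gets `(0.0, 0.0, 1.0, 1.0)` and the small window gets `(-0.5, -0.5, 1.5, 2.5)`: its texture occupies only the middle of the quad, and the combiner sees it only there.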

Xgl implements the composite model. Although not required, Xgl will make use of a new OpenGL feature, framebuffer objects. With an extension to OpenGL, FBOs would allow very efficient sharing of the offscreen application windows with the window manager. The extension is not that hard; we already share pbuffers across processes. To an application, FBOs look like a normal drawing window. To the window manager, the windows look like textures and can be manipulated with normal drawing commands like multitexture. In this model the application windows are still 2D, but now it is possible to write an OpenGL based window manager like Luminosity. An OpenGL based window manager can combine windows using the Z buffer and remove the limits of strict 2D Z order. For example, a window can be transformed into a wave and repeatedly intersect another window.
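
Why a Z buffer removes the strict stacking order is easiest to see on a single scanline. In this sketch, assumed for illustration only, each window supplies a depth per pixel (smaller is nearer) and the nearest window wins pixel by pixel, so a rippled window can pass in front of and behind a flat one. The function name is hypothetical.

```python
# Hypothetical 1-D illustration of depth-buffer compositing.

def depth_composite(width, layers, background="."):
    """Combine windows on one scanline with a per-pixel depth test.

    layers: list of (color, depth_fn) where depth_fn(x) returns the window's
    depth at column x. The drawing order of the layers does not matter.
    """
    color_buf = [background] * width
    depth_buf = [float("inf")] * width
    for color, depth_fn in layers:
        for x in range(width):
            z = depth_fn(x)
            if z < depth_buf[x]:        # the depth test
                depth_buf[x] = z
                color_buf[x] = color
    return "".join(color_buf)
```

With a flat window `("A", lambda x: 0.5)` and a zigzag "wave" `("B", lambda x: 0.0 if (x // 2) % 2 == 0 else 1.0)`, an 8-pixel scanline comes out `BBAABBAA` whichever window is drawn first. No single Z order could produce that interleaving.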

Finally, you can remove the 2D limitations on application windows and give them thickness or other 3D shapes. For example, a pop-up menu really could pop up in higher 3D coordinates. When you combine this with a light source, drop shadows appear naturally instead of being artificially constructed as in 2D. Sun’s Project Looking Glass is this type of window manager. Window thickness is demonstrated in the Looking Glass demo video. Looking Glass runs existing X applications using a rootless X server. The windows are then given thickness and combined with true 3D applications like the CD changer demo.

The concept of a 3D desktop is not that strange. We have had a 3D desktop ever since we moved away from tiled windows in Windows 1.0 to overlapping ones; once Z order was introduced, the desktop became a non-perspective 3D space. 3D concepts are all over the desktop; look at menus and pop-ups. A big reason for implementing composite was to provide drop shadows, which are obviously a 2D representation of a 3D space. Even controls like buttons are made to look 3D via 2D simulation. Why not draw them in 3D and let the user control the light source? Window translucency obviously involves 3D concepts. Why don’t we just admit that the desktop is a 3D space and use 3D graphics to draw it?

23. Drawing the line.

There’s been some debate on this topic. Linux really only has one choice for making an open source transition to a GPU based desktop: the Mesa OpenGL implementation. Sure, we could clone DirectX, but who is going to write all of that code and drivers? OpenGL is not a bad choice. It’s a requirement to have it on Linux no matter what we build. It is standardized and controlled by an ARB (architecture review board). It is well designed and well documented. It is in widespread distribution and use. Colleges teach classes on it and many books have been written about it. Cool apps and games run on it.

At what level should the graphics device driver line be drawn? XAA and fbdev draw the line at a low level. These APIs concern themselves with pixels in the framebuffer, bitblt, and maybe line drawing. Any features offered by the graphics chips above this level are either inaccessible or need chip specific API escapes to access. The Xgl implementation draws the line in a very different place: at the extremely high level of the OpenGL API itself. I believe the Xgl approach is the better choice. You want to draw the line well above the feature set currently implemented in silicon in order to give the silicon room to evolve without disrupting the API. I don’t believe Linux would benefit from running through lots of APIs like DirectX did. The better model is to start really high level, provide the reference software implementation, Mesa, and then replace pieces of Mesa with accelerated hardware implementations, DRI. We also have multiple OpenGL implementations: Mesa, Nvidia and ATI. Which one you use is your choice; believe it or not, some people like the proprietary drivers.
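
The "reference implementation first, then swap in accelerated pieces" model can be illustrated with a per-entry-point dispatch table. This is a toy under stated assumptions: the class and function names are hypothetical, and Mesa’s real dispatch and driver hook mechanism is far more involved. The point is only that the API stays fixed while individual operations move from software to hardware.

```python
# Hypothetical dispatch table; a toy model of software fallback + driver hooks.

def sw_fill_rect(fb, x, y, w, h, color):
    """Reference software path: always available, never fast."""
    for row in range(y, y + h):
        for col in range(x, x + w):
            fb[row][col] = color
    return "software"

class Dispatch:
    """Maps API entry points to implementations."""

    def __init__(self):
        # Every entry point starts out pointing at the reference implementation.
        self.table = {"fill_rect": sw_fill_rect}

    def install_driver(self, hooks):
        # A driver overrides only the operations its silicon accelerates;
        # everything else keeps using the software path.
        self.table.update(hooks)

    def call(self, name, *args):
        return self.table[name](*args)
```

Applications always call `Dispatch.call("fill_rect", ...)`. Before a driver is installed the software path runs; after `install_driver({"fill_rect": hw_fill_rect})` (where `hw_fill_rect` stands in for an accelerated routine) the same call goes to the hardware path, and the application never notices the switch.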

Xgl was designed as a near term transition solution. The Xgl model was to transparently replace the drawing system of the existing X server with a compatible one based on using OpenGL as a device driver. Xgl maintained all of the existing X APIs as primary APIs. No new X APIs were offered and none were deprecated. Xgl is a high level, cross platform code base. It is generic code and needs to be ported to a specific OpenGL environment. One port, Xglx, exists for the GLX API. Another port, Xegl, works on the cross platform EGL API. Note that EGL is a platform independent way of expressing the GLX, agl and wgl APIs. With the Mesa extensions EGL can control the low level framebuffer. This combination provides everything needed to implement a windowing system (like the current X server) on bare video hardware. But Xgl was a near term, transitional design, and by delaying demand for Xgl, the EXA band-aid removes much of the need for it.

An argument that comes up over and over is that we should not use OpenGL because of all the older hardware in use in the developing world. There are two solutions to this problem. First, OpenGL is a scalable API. Via software fallbacks it is capable of running on the lowest level hardware. OpenGL ES also provides API profiles. Profiles are well defined subsets of the OpenGL API. If needed, we could define a minimal profile covering the smallest possible OpenGL API. Code size should not be a problem; a proprietary 100KB OpenGL ES implementation is available. Another OpenGL ES profile does not require floating point support. Nothing is stopping open source equivalents from being built if we choose to spend the resources.
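
The profile without floating point (OpenGL ES 1.x Common-Lite) represents values in signed 16.16 fixed point. Here is a minimal sketch of that arithmetic, written with Python integers for readability. The helper names are hypothetical, and a C implementation would also need a 64-bit intermediate for the multiply and would have to handle 32-bit overflow, which Python’s unbounded integers hide.

```python
# Hypothetical 16.16 fixed point helpers; integers only in the hot paths.

FRAC_BITS = 16
ONE = 1 << FRAC_BITS           # 1.0 in 16.16 is 65536

def to_fixed(value):
    """Convert a number to 16.16 fixed point (rounded to nearest)."""
    return round(value * ONE)

def from_fixed(f):
    return f / ONE

def fixed_mul(a, b):
    # The raw product carries 32 fractional bits; shift 16 of them away.
    return (a * b) >> FRAC_BITS

def fixed_div(a, b):
    # Pre-shift the dividend so the quotient keeps 16 fractional bits.
    return (a << FRAC_BITS) // b
```

For example, `fixed_mul(to_fixed(1.5), to_fixed(-2))` equals `to_fixed(-3)` and `fixed_div(to_fixed(1), to_fixed(4))` equals `to_fixed(0.25)`, all without touching a floating point unit.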

The other argument is to simply run software that was designed to work on the hardware in use. No one expects an original IBM PC to run Windows Longhorn but the same PC continues to run DOS without problems.

The key point here is that OpenGL is more scalable than EXA. EXA scalability is broken at the high end; it does not cover advanced GPU features like 3D and programmability. With OpenGL it is possible to have a single API all the way from a cell phone to a supercomputer.

24. Stacking the Blocks.

That’s a lot of acronyms to digest all at once. How about some examples on how the libraries work together:

App -> gtk+ -> X -> XAA -> hw

This is the current X server. Application talks to toolkit which uses the xlib API for the X server. The X server draws on the hardware with the current XAA drivers. X and the application are in two different processes.

App -> gtk+ -> Cairo -> X Render -> X -> XAA/EXA -> hw

Toolkit using the new Cairo library. Cairo depends on X Render. If EXA is available X Render is accelerated. X and the application are in two different processes.

App -> qt -> Arthur -> X Render -> X -> XAA/EXA -> hw

Arthur is the Trolltech equivalent to Cairo, it behaves pretty much the same.

App -> gtk+ -> Cairo -> glitz -> GL -> hw

Toolkit using the new Cairo library. Cairo chooses the glitz backend for OpenGL based direct rendering. Everything is accelerated and drawn from a single process due to direct rendered OpenGL.

App -> gtk+ -> Cairo -> X Render -> Xgl -> EGL(standalone) -> GL -> hw

In this case, the toolkit chose the xlib backend for Cairo and talks to the Xegl server with X Render. Xegl uses glitz to provide its Render implementation. Glitz will direct render to the hardware. Xegl and the application are in two different processes. Note that the toolkit could have chosen to use glitz directly and direct rendered from a single process.

App -> gtk+ -> Cairo -> X Render -> Xgl -> GLX(X) -> GL -> hw

Toolkit is again talking X Render to the Xglx server. Xglx is not a standalone server; it is a nested server. But Xglx is not a normal nested server: it uses nesting for input, but it draws its entire desktop inside a single OpenGL window supplied by the parent server. If you go full screen you can’t see the parent server anymore. There are three processes: the app, Xglx and the X server. The drawing happens between the app and Xglx because Xglx uses direct rendering. The third process, the X server, is involved since it provides the window and input. It also hosts the Xglx app.

25. A Future Direction.

Linux is now guaranteed to be the last major desktop to implement a desktop GUI that takes full advantage of the GPU. Given that there is no longer any time pressure, perhaps a longer term solution is in order. We could design a new server based around OpenGL and Cairo.

In general, the whole concept of programmable graphics hardware is not addressed in APIs like xlib and Cairo. This is a very important point: programmability, a major new GPU feature, is simply not accessible from the current X APIs. OpenGL exposes this programmability via its shading language.

When considering a new server be sure to split the conceptual design into two components: platform specific and platform independent. Device drivers are platform specific, in the proposed model OpenGL is considered a device driver so it will have a platform specific implementation. All of the issues of integration with other Linux subsystems will be hidden inside the appropriate drivers. The main server will be implemented using the cross platform APIs like sockets, OpenGL and EGL. A cross platform API for hotpluggable devices will need to be specified. The server would not need to be designed from scratch. Substantial portions of code could be reused from other projects and would be combined in new ways. From what I can see, 95 percent or more of the code needed for a new server already exists.

Modularization is key to making a good server. The design should be split into isolated libraries that communicate via standard interfaces. Splitting things this way makes it easy to replace a library. You may have completely different implementations of a library on different platforms, or there may be multiple ways to implement the library on a single platform. Of course, cross platform code has its advantages. Things like the Mesa library can be shared by all target systems.

Remember how DirectFB uses rootless X? The new server would run rootless X with software rendering to achieve legacy compatibility. The direct rendering model of DRI is a good design and should be retained in future servers. Moving X to a legacy mode allows complete freedom in a new server design.

For example, the new server can design a new networking protocol. The recent Linux Journal article about No Machine and the NX protocol shows that the X protocol can be compressed 200:1 or more. The new protocol would be OpenGL based and be designed to avoid round trip latencies and to provide a persistent image cache. Chromium is an interesting system that supports splitting OpenGL display over multiple monitors connected by a network. Their network library does OpenGL state tracking as one way of avoiding network traffic.
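
A persistent image cache can avoid both resending pixels and round trips if the client keeps its own record of what the server already holds, so it never has to ask. The sketch below is a hypothetical protocol invented for illustration; it is not NX’s actual wire format. It assumes content-addressed images keyed by a SHA-1 digest, an in-memory cache standing in for a persistent one, and a server that never evicts entries.

```python
import hashlib

# Hypothetical protocol: b"P" + digest + pixels uploads an image,
# b"R" + digest reuses an image the server already holds.

class ImageCacheClient:
    def __init__(self):
        self.known = set()                      # digests the server holds

    def encode_put_image(self, pixels: bytes) -> bytes:
        digest = hashlib.sha1(pixels).digest()  # 20-byte content key
        if digest in self.known:
            return b"R" + digest                # 21 bytes, no round trip needed
        self.known.add(digest)
        return b"P" + digest + pixels           # full upload, cached by server

class ImageCacheServer:
    def __init__(self):
        self.cache = {}

    def decode(self, msg: bytes) -> bytes:
        kind, digest, body = msg[:1], msg[1:21], msg[21:]
        if kind == b"P":
            self.cache[digest] = body           # remember for later references
        return self.cache[digest]
```

The first time an icon is drawn the whole bitmap crosses the wire; every later draw costs 21 bytes. If the server were allowed to evict entries, the client’s `known` set would need an invalidation message, which is exactly the kind of design question a new protocol would have to settle.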

The glyph cache should also be analyzed. In the current X server, the client generates the glyph bitmaps and sends them to the server where they are cached. This model precludes GPU generated glyphs as described in the paper referenced earlier. Client-side fonts are a good idea. The application should definitely be in charge of layout. But there is no real requirement for the client side generation of glyph bitmaps. In the case of the scalable GPU generated fonts, more data than a simple bitmap needs to be sent to the server.

A new server could address current deficiencies in network sound and printing. It could support the appropriate proxying needed to reattach sessions. It should definitely be designed to support multiuser from the beginning.

Event handling is a good example of building things with libraries. Linux has evdev, kernel hotplug, HAL and D-BUS. These subsystems don’t exist on most other platforms. Because of this, an input subsystem for BSD would look very different from one designed for Linux. Making the major subsystems modular lets them be customized and make full use of the environment they run in. This is a different approach from designing to the least common denominator between environments.

X is used by various intelligence agencies around the world. The current X server is known not to be secure enough for use on sensitive documents. If major design work were to happen, it could be built from the ground up with security in mind. Some appropriate funding would go a long way in making sure the right features get implemented.

In the short term, there are big things that need to be done and recent development has started to address these issues. Number one is a memory manager for DRM and the rework needed to enable FBOs (framebuffer objects) in the DRI drivers. Number two is cleaning up the fbdev drivers corresponding to the hardware we have DRM drivers for. With the groundwork in place, work on a new server design could begin.

26. Conclusion.

My experience with the failure of Xegl has taught me that building a graphics subsystem is a large and complicated job, far too large for one or two people to tackle. As a whole, the X.org community barely has enough resources to build a single server. Splitting these resources over many paths only results in piles of half finished projects. I know developers prefer working on whatever interests them, but given the resources available to X.org, this approach will not yield a new server or even a fully-competitive desktop based on the old server in the near term. Maybe it is time for X.org to work out a roadmap for all to follow.